What Nobody Tells You About Arrests Org Face Search: Uncovering the Hidden Mechanics Behind Digital Policing


A face search in law enforcement contexts, often tied to underground groups, activist collectives, or unidentified suspects, is one of the most contentious and technically sophisticated tools in modern surveillance. A closer look at Arrests Org face search reveals a complex ecosystem where public databases, biometric algorithms, and informal policing networks intersect, largely beyond public scrutiny. While the use of facial recognition in arrests is widely debated, the behind-the-scenes mechanics (especially how face search databases are built, accessed, and triggered) remain largely hidden, raising urgent questions about privacy, accountability, and civil liberties.

The core technology behind most law enforcement face searches is automated facial recognition (AFR): systems trained on vast datasets drawn from public records, social media, and previously collected images. These systems scan video footage, phone clips, or social media posts from an incident and compare facial features against suspect databases maintained by police departments and intelligence units. No one should assume that a simple face match leads to an immediate arrest; the process involves layers of validation, human judgment, and often inter-agency coordination.
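The comparison step described above is typically a one-to-many (1:N) search. A "probe" image from footage is scored against every record in a gallery, and the best-scoring records become a ranked candidate list for a human examiner. The following Python sketch illustrates that idea under stated assumptions:

- Faces have already been reduced to numeric feature vectors.
- Cosine similarity stands in for whatever scoring a real system uses.
- The names `search_gallery` and `top_k` and the gallery records are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search_gallery(
    probe: List[float], gallery: Dict[str, List[float]], top_k: int = 3
) -> List[Tuple[str, float]]:
    """Hypothetical 1:N search: score the probe against every enrolled record and
    return the top_k (record_id, score) pairs, best first. The output is a
    candidate list for human review, not an identification."""
    scored = [(record_id, cosine_similarity(probe, vec)) for record_id, vec in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Toy gallery with made-up three-number feature vectors (real vectors are far longer).
gallery = {
    "record_A": [0.9, 0.1, 0.2],
    "record_B": [0.1, 0.9, 0.3],
    "record_C": [0.6, 0.4, 0.3],
}
probe = [0.85, 0.15, 0.15]  # features assumed to come from, e.g., a camera still
for record_id, score in search_gallery(probe, gallery, top_k=2):
    print(f"{record_id}: {score:.3f} -> send to human examiner")
```

Returning a short ranked list rather than a single answer reflects the point in the text: the algorithm narrows the field, and people make the call.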

Outside the warrant process, law enforcement accesses facial recognition data through internal databases such as the Integrated Facial Recognition System (IFRS) or the FBI’s Next Generation Identification (NGI) platform. These systems don’t operate in isolation; they rely on partnerships between federal, state, and local forces, sometimes sharing data across jurisdictions with minimal public oversight. “What people don’t realize is how fluid and decentralized the flow of facial data actually is,” notes cybersecurity analyst Dr. Lena Cho. “Frontline units often conduct real-time searches during active operations, bypassing standard chain-of-custody protocols.”

Unlike consumer-facing face search tools, which match faces against public social profiles, law enforcement applications prioritize source imagery captured during investigations—footage from city cameras, drones, body cams, or citizen videos. The technology identifies potential matches by analyzing geometric facial landmarks, such as eye distance, jawline contours, and nasal bridge structure, generating confidence scores that determine whether an alert warrants officer attention.
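To make the landmark idea concrete, the sketch below converts a handful of landmark coordinates into three ratios:

- nasal bridge length,
- jaw width,
- eye-line-to-chin height.

Each ratio is divided by the distance between the eyes. The sketch then maps the difference between two faces to a score between 0 and 1. This is an illustrative assumption, not how any particular vendor works; many modern systems compare learned embeddings rather than hand-picked ratios. The landmark names and the `1 / (1 + distance)` scoring rule are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def _distance(p: Point, q: Point) -> float:
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def geometric_features(lm: Dict[str, Point]) -> List[float]:
    """Hypothetical feature set: landmark distances divided by eye distance,
    so the result does not depend on how large the face appears in the image."""
    eye_distance = _distance(lm["left_eye"], lm["right_eye"])
    eye_mid = (
        (lm["left_eye"][0] + lm["right_eye"][0]) / 2,
        (lm["left_eye"][1] + lm["right_eye"][1]) / 2,
    )
    return [
        _distance(lm["nose_bridge"], lm["nose_tip"]) / eye_distance,  # nasal bridge length
        _distance(lm["jaw_left"], lm["jaw_right"]) / eye_distance,    # jaw width
        _distance(eye_mid, lm["chin"]) / eye_distance,                # eye line to chin
    ]


def match_confidence(a: List[float], b: List[float]) -> float:
    """Map the distance between two feature vectors to (0, 1]; 1.0 means identical ratios."""
    return 1.0 / (1.0 + math.dist(a, b))


face = {
    "left_eye": (30.0, 40.0), "right_eye": (70.0, 40.0),
    "nose_bridge": (50.0, 42.0), "nose_tip": (50.0, 62.0),
    "jaw_left": (20.0, 80.0), "jaw_right": (80.0, 80.0),
    "chin": (50.0, 100.0),
}
# Same face photographed closer to the camera: every coordinate doubles.
same_face_closer = {name: (2 * x, 2 * y) for name, (x, y) in face.items()}
# A different face with a narrower jaw.
other_face = dict(face, jaw_left=(25.0, 80.0), jaw_right=(75.0, 80.0))

print(match_confidence(geometric_features(face), geometric_features(same_face_closer)))
print(match_confidence(geometric_features(face), geometric_features(other_face)))
```

Dividing by eye distance makes the features scale-invariant. The same face at a different size yields the same ratios and a score of 1.0, while a change in jaw width lowers the score.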

A match with high confidence (typically a score above 80%) triggers a coordinated investigative response, often involving surveillance escalation or database cross-referencing. “These algorithms aren’t infallible,” warns Dr. Marcus Reed. “False positives are not rare; they can lead to wrongful detentions or surveillance overreach when spun as immediate evidence.”
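Why false positives are "not rare" comes down to arithmetic: a small per-comparison error rate, multiplied across a large gallery, adds up. The sketch below pairs a simple threshold filter with that expected-count calculation. It assumes each comparison against a different person fails independently, and it uses the 80% figure from the text only as an illustrative threshold. The function names and all numbers are hypothetical.

```python
from typing import List, Tuple

# The "above 80%" figure from the text, expressed as a 0-1 score. Real systems
# set their own thresholds; this value is only for illustration.
ALERT_THRESHOLD = 0.80


def triage(candidates: List[Tuple[str, float]], threshold: float = ALERT_THRESHOLD) -> List[str]:
    """Keep only candidates whose score exceeds the threshold.
    These are leads for a human examiner, not evidence of identity."""
    return [record_id for record_id, score in candidates if score > threshold]


def expected_false_matches(non_mated_records: int, false_match_rate: float) -> float:
    """Expected number of wrong records clearing the threshold in one 1:N search,
    assuming each comparison with a different person independently passes
    with probability false_match_rate."""
    return non_mated_records * false_match_rate


candidates = [("record_A", 0.91), ("record_B", 0.83), ("record_C", 0.42)]
print(triage(candidates))

# Hypothetical numbers: a 1,000,000-record gallery and a 1-in-10,000 false match rate.
print(expected_false_matches(1_000_000, 1e-4))
```

Under these made-up figures, a single search would be expected to surface about 100 wrong records above the threshold. That is why a high score alone is a weak basis for action.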

The harm often stems not from technical failure alone, but from opacity around warrant requirements and due process. While some states require judicial approval before police can query facial recognition databases, others operate under a “reasonable suspicion” standard, allowing pretextual searches that evade stricter legal thresholds. Arrests linked to face search matches frequently cite vague charges, such as “suspicious presence” or “failure to identify,” which can circumvent traditional evidentiary standards.

“The average person assumes a face match equals probable cause,” says privacy lawyer Elena Torres. “In reality, law enforcement treats it as a starting point—not a conclusion.”

What rarely surfaces in public discourse is the role of informal networks: local sheriff offices, surveillance task forces, and even private security firms that build and deploy custom face search tools without formal regulation. These groups often operate outside standard accountability frameworks, using proprietary software packages or open-source tools that bypass official audit trails.

“Some face search operations are run entirely on mobile devices by officers who never notify headquarters,” explains a former digital forensics specialist. “What Nobody Tells You is that these shadow systems can be just as impactful—and just as dangerous—as sanctioned police operations.”

Moreover, demographic bias embedded in facial recognition datasets deepens the ethical quagmire. Studies from NIST and the ACLU reveal significantly higher error rates for people of color, women, and non-binary individuals, amplifying risks of racial profiling.
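Demographic disparities of this kind are commonly expressed as differences in error rates between groups. A minimal sketch of one such measurement is the false match rate: the share of different-person comparisons that the system wrongly calls a match, computed separately for each group. The record layout and group labels are hypothetical, and the toy data is invented purely to show the calculation.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# One trial = (demographic_group, same_person_in_reality, system_declared_match)
Trial = Tuple[str, bool, bool]


def false_match_rate_by_group(trials: Iterable[Trial]) -> Dict[str, float]:
    """False match rate per group: among comparisons of two different people,
    the fraction the system wrongly declared a match."""
    impostor_pairs: Dict[str, int] = defaultdict(int)
    false_matches: Dict[str, int] = defaultdict(int)
    for group, same_person, declared_match in trials:
        if same_person:
            continue  # genuine pairs measure missed matches, not false matches
        impostor_pairs[group] += 1
        if declared_match:
            false_matches[group] += 1
    return {group: false_matches[group] / n for group, n in impostor_pairs.items()}


# Made-up toy data for illustration only; these are not NIST or ACLU results.
trials = [
    ("group_1", False, True), ("group_1", False, False),
    ("group_1", False, False), ("group_1", False, True),
    ("group_2", False, False), ("group_2", False, False),
    ("group_2", False, False), ("group_2", False, True),
    ("group_2", True, True),
]
print(false_match_rate_by_group(trials))
```

In this invented example, one group is wrongly matched twice as often as the other. That is the kind of gap that, at scale, translates into more people from one group being flagged as suspects.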

When such flawed matches feed into arrests, the consequences compound: innocent individuals face wrongful surveillance, harassment, and criminal records based on speculative matches. Law enforcement agencies increasingly acknowledge this bias, yet most fail to mandate bias testing or transparency in their face search workflows. “We’re not immune to technology’s flaws,” admits a Department of Homeland Security spokesperson. “But accountability, at this juncture, remains reactive rather than preventive.”

Legal challenges continue to shape how face search is deployed, with recent court rulings in several states striking down warrantless facial recognition use. These decisions underscore a growing consensus: mass surveillance tools must not erode constitutional protections. Yet a disconnect persists between technological capability and legal restraint, in a system where operational urgency often overshadows civil safeguards.

The face search pipeline, from data collection to arrest, is opaque, layered, and rife with human discretion. Legal scholars argue that without stricter oversight, protections for anonymity, and clear standards, the line between investigation and oppression grows perilously thin.

The real power of face search lies not in its speed or precision, but in its scalability and silence—the quiet way it identifies, tracks, and potentially criminalizes individuals long before a trial begins.

Behind the arrest allegations and media headlines is a machinery built on fragmented databases, informal networks, and algorithmically derived suspicion. As surveillance expands beyond visible checkpoints into real-time digital eyes, the stakes grow higher: someone’s face seen in a crowd today might determine the course of their life tomorrow—without their knowledge, consent, or recourse. What nobody told you about arrests via face search is not just what happens during enforcement, but how a silent algorithm can initiate a descent into legal and social consequence—unseen, unchallenged, and often irreversible.

Related Post

Super Mario Level Complete: The Cadence of Cadence Roman Notes Guides the Journey

Shawn Michaels Credited For Recreating Another Famous WWE Angle For NXT Storyline

Who Is Josh Gates Dating? Inside the Romance of the Longest-Running Television Survivor

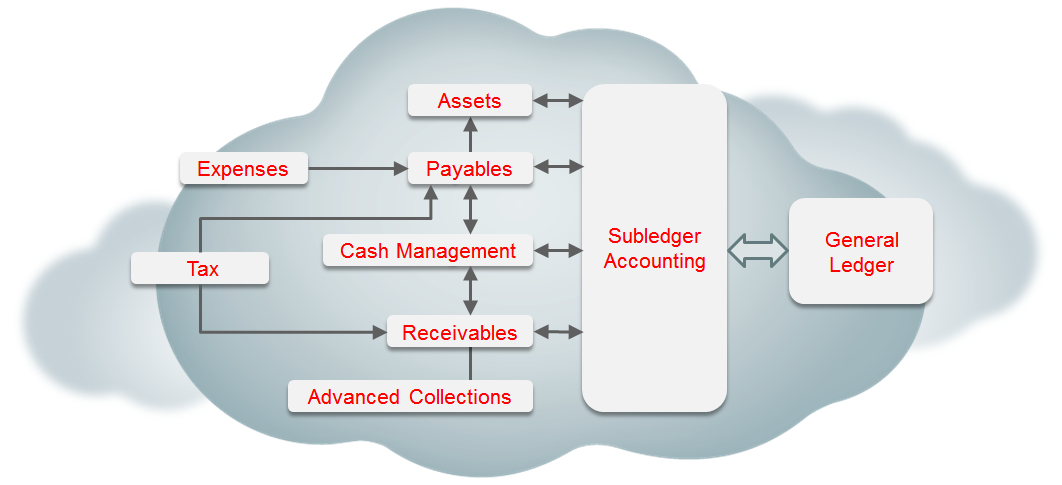

Oracle Cloud Financials: Transform Your Finance Operations with Powerful Cloud Integration