Software Vs. Hardware: The Core Battle That Defines Your Computer’s Performance

Every computer’s true potential lies not in one component alone but in the complex interplay between software and hardware, a dynamic duo that determines speed, responsiveness, and long-term usability. Software provides the instructions that bring a system to life; hardware forms the physical foundation on which those instructions run. Understanding the distinction and synergy between the two is essential for making informed upgrades, troubleshooting performance issues, and achieving peak efficiency.

Far more than mere technical jargon, software and hardware together define the soul of any computing experience.

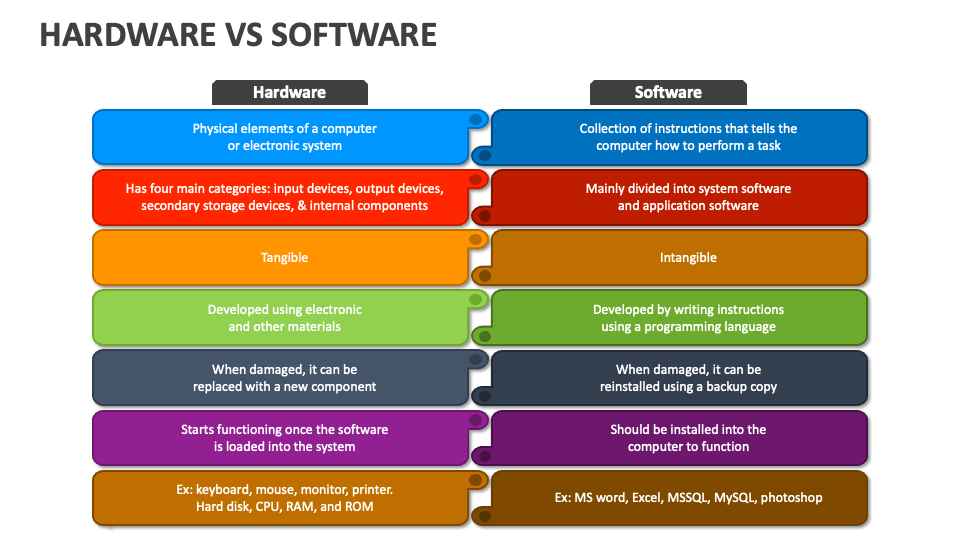

The hardware component encompasses all the tangible parts of a computer: the central processing unit (CPU), memory (RAM), storage drives, graphics processing unit (GPU), motherboard, and peripherals. These physical elements supply raw computational power, hold data in memory, execute instructions, and move data between components.

As noted by computing expert Dr. Elena Torres, “Hardware establishes the ceiling of what a system can do; without robust physical infrastructure, even the most elegant software will falter.”

Core Functions: From Instructions to Execution

Software, by contrast, comprises the programs, operating systems, and applications that direct hardware to perform tasks, translating user intent into machine-executable commands. The operating system, such as Windows, macOS, or Linux, manages hardware resources and coordinates their use across components. Applications, ranging from office suites to game engines, build on these underlying systems to deliver specific functionality.

One key distinction is how each is upgraded: software updates can add capabilities without replacing anything physical, whereas hardware upgrades, such as faster storage or a more powerful GPU, are often the only way to raise the performance ceiling once the software is already well optimized.

Consider a high-resolution video edit: software decodes video frames, applies visual effects, and renders the output, while specialized GPU units accelerate the rendering. Similarly, a lightweight cloud-based document editor runs smoothly on modest hardware thanks to optimized software, whereas a resource-heavy game demands top-tier hardware to deliver playable frame rates.

This symbiotic relationship means neither component operates in isolation: hardware often sets the limit on raw throughput, while software determines how efficiently that throughput is used and what the user ultimately experiences.
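One standard way to reason about this division of labour is Amdahl's law: the speedup gained from adding cores (hardware) is capped by the fraction of a program that can run in parallel (software). The sketch below is an illustration of that formula, not a measurement of any real system; the parallel fractions and core counts are hypothetical values chosen for the example.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical speedup under Amdahl's law.

    parallel_fraction: share of the runtime that can be spread across cores (0..1).
    cores: number of cores available.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction  # portion that only one core can run
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workloads: the same hardware running differently written software.
    for p in (0.50, 0.90, 0.99):
        for n in (4, 16):
            print(f"parallel={p:.0%} cores={n:2d} speedup={amdahl_speedup(p, n):.2f}x")
```

With half the work serial, going from 4 to 16 cores lifts the theoretical speedup only from about 1.6x to about 1.9x; on the same hardware, software that is 99% parallel goes from about 3.9x to about 13.9x.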

Performance Trade-Offs: Speed, Cost, and Practical Limits

Hardware specifications such as clock speed, core count, and memory bandwidth largely determine headline benchmark results, and tools like CPU-Z or GPU-Z can report them. For demanding tasks like 3D rendering or real-time ray tracing, high-end hardware remains indispensable. Benchmarks show modern GPUs cutting rendering times by up to 70% compared with older models while consuming similar or less power. Yet cost considerations often tip the balance.
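Tools like CPU-Z and GPU-Z report hardware specifications; a quick way to see how those specifications translate into run time on a particular machine is to time a fixed workload yourself. The Python sketch below is a rough micro-benchmark under stated assumptions, not a substitute for a proper benchmark suite: the workload (`busy_loop`) is an arbitrary stand-in, and `speedup_from_time_reduction` is a hypothetical helper that converts a percentage cut in run time, such as the 70% figure above, into a speedup factor.

```python
import os
import platform
import time


def busy_loop(n: int = 2_000_000) -> int:
    """Arbitrary CPU-bound stand-in workload: sum of squares below n."""
    return sum(i * i for i in range(n))


def time_workload(repeats: int = 3) -> float:
    """Return the best wall-clock time (seconds) over several runs."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        busy_loop()
        best = min(best, time.perf_counter() - start)
    return best


def speedup_from_time_reduction(percent_reduction: float) -> float:
    """Convert a percentage cut in run time into a speedup factor (70% -> about 3.33x)."""
    if not 0 <= percent_reduction < 100:
        raise ValueError("percent_reduction must be in [0, 100)")
    return 1.0 / (1.0 - percent_reduction / 100.0)


if __name__ == "__main__":
    # platform.processor() may return an empty string; os.cpu_count() may return None.
    print("Processor:", platform.processor() or "unknown")
    print("Logical CPUs:", os.cpu_count())
    print(f"Best run: {time_workload():.3f} s")
    print(f"70% less time = {speedup_from_time_reduction(70):.2f}x faster")
```

Because the loop runs on a single core in CPython, this measures per-core speed on that machine rather than the full capability CPU-Z reports; taking the best of several runs reduces noise from background processes.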

Investing in a flagship CPU or an NVMe SSD delivers measurable gains, but returns diminish quickly if another component, such as too little RAM, remains the bottleneck. Conversely, modest hardware paired with efficient, well-optimized software can feel surprisingly responsive in everyday use such as browsing, streaming, and multitasking.
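The diminishing-returns point can be illustrated with a simple bottleneck model: if every unit of work passes through several components in turn, overall throughput is set by the slowest one. The component names and throughput figures below are hypothetical, chosen only to show the effect; real systems overlap work and are rarely this clean.

```python
def pipeline_throughput(stages: dict[str, float]) -> tuple[str, float]:
    """Return the limiting stage and the overall throughput (units/s).

    Simplified model: every unit of work passes through each stage,
    so the slowest stage caps the whole pipeline.
    """
    name = min(stages, key=stages.get)
    return name, stages[name]


if __name__ == "__main__":
    # Hypothetical throughputs in work units per second.
    system = {"cpu": 400.0, "ram": 250.0, "storage": 300.0}
    print("before:", pipeline_throughput(system))

    # A flagship CPU upgrade doubles CPU throughput while RAM stays the same.
    upgraded_cpu = {**system, "cpu": 800.0}
    print("after CPU upgrade:", pipeline_throughput(upgraded_cpu))

    # Upgrading the actual bottleneck raises the limit and moves it elsewhere.
    upgraded_ram = {**system, "ram": 500.0}
    print("after RAM upgrade:", pipeline_throughput(upgraded_ram))
```

Doubling CPU throughput leaves the result at 250 units per second because RAM is still the limit, while upgrading RAM raises it to 300 and shifts the bottleneck to storage.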

Key Metric: Thermal Design Power (TDP), roughly the amount of heat a component's cooling solution is designed to dissipate under sustained load, marks another critical intersection, because power efficiency shapes both hardware selection and software behavior. High-TDP components consume more electricity and generate more heat, demanding robust cooling, an often-overlooked factor in long-term system stability.

Clean, efficient software keeps background processes from inflating CPU and GPU usage, which preserves performance and, by reducing heat, can extend hardware lifespan. As tech analyst Raj Patel notes, “An optimized software stack can deliver 30% better runtime efficiency on equal hardware—proving software optimization is hardware’s silent multiplier.”

Future-Proofing: Software Optimization and Hardware Evolution

Software’s role extends beyond current capability to future adaptability. Modern operating systems and applications support hardware virtualization, containerization, and machine-learning inference, features that can turn legacy machines into flexible, multi-purpose platforms. Conversely, hardware advances such as PCIe 5.0, DDR5 RAM, and AMD’s Zen 5 architecture push performance boundaries, but real gains depend on software’s ability to take advantage of them.

Driver and API advancements further illustrate this synergy: newer graphics APIs let games and creative tools tap hardware acceleration, such as ray tracing through DirectX 12 on Windows or Apple’s Metal on macOS, delivering cinematic visuals on compatible systems. Meanwhile, software updates periodically extend hardware relevance: with driver improvements, a mid-2010s gaming PC may still run modern titles at low settings, showing how software can stretch physical constraints.

Yet hardware limitations remain non-negotiable. A CPU built on a 6-nanometer process cannot, however refined its software, exceed the bottlenecks set by its architectural design.

Software can only preserve the user experience within the hardware’s thermal, power, and physical limits. A powerful CPU paired with inefficient, bloated software will generate excessive heat and execute slowly. The ideal balance, where efficient software runs on capable hardware, creates a responsive, efficient, and future-ready system.

Real-World Implications: What This Means for Everyday Users

For consumers, the software-hardware dynamic determines value. Upgrading memory or storage offers immediate gains without replacing the entire system, which suits budget-conscious buyers. Enthusiasts seeking peak performance must weigh both components: pairing a high-end motherboard with lightweight software preserves headroom for future updates. For gamers, frame rates depend not just on GPU power but on how well drivers and game engines optimize for that hardware.

As silicon evolves, software must adapt to unlock its true potential.

This dual dependency highlights that performance isn’t just about “more specs”; it’s about harmony between what’s built and how effectively it’s managed.

In an era of cloud computing and edge devices, the definition shifts slightly, as software increasingly abstracts hardware through virtual machines and containerized environments. The core principle endures, however: hardware provides the stage and the scale, while software directs the performance and functionality. A computer’s true core lies not in one layer but in their intricate coordination, a partnership that shapes how we create, compute, and connect in an ever-faster digital world.

Related Post

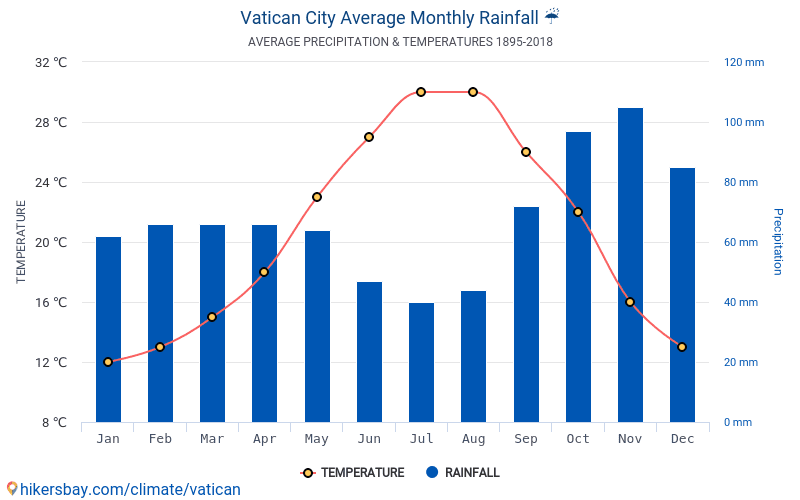

Vatican City Weather Your Ultimate Guide: Precise Insights into Rome’s Sacred Climate

Mapa Sawgrass Mills: Florida’s Premier Retail Destination Transforming Shopping in the Southeast

Unveiling Kehlani’s Real Name: The Woman Behind The Music