🚨 Mastering Alert Validation: Why Prueba De Evaluación De Alertas Works in Modern Risk Management

An effective alert system is the backbone of proactive threat detection and incident response across industries, from cybersecurity and industrial operations to healthcare and financial services. At its core lies a critical yet often underappreciated process: Prueba De Evaluación De Alertas (Alert Evaluation Test). This rigorous evaluation framework ensures that every triggered alert delivers actionable, accurate, and timely intelligence, turning raw signals into confident decision-making.

Without it, even the most sophisticated alerting infrastructure risks becoming a source of noise rather than a shield against crises. Prueba De Evaluación De Alertas is not a one-time check but a systematic process designed to measure the reliability, relevance, and timeliness of alerts generated by monitoring systems. Its role is indispensable in minimizing false positives while ensuring real threats aren’t overlooked.

As organizations increasingly rely on automated surveillance, the validation of alerts has evolved from a technical formality to a strategic imperative.

Why Alert Validation Is Non-Negotiable in High-Stakes Environments

In today’s fast-paced digital landscape, operations teams are flooded with potential alerts every day. Studies show that the average security team faces thousands of alert notifications per day, many of them irrelevant or unactionable, which leads to what experts call “alert fatigue.” A 2023 report from Gartner found that over 70% of breach investigations originate from false positives, draining valuable human and technical resources. This overwhelming noise undermines trust in alerting systems.

That’s where Prueba De Evaluación De Alertas cuts through the chaos. It introduces a structured methodology to assess whether alerts are:

- Contextually relevant to actual threats
- Timely, meaning triggered when actionable thresholds are breached
- Accurate, with minimal false alarms
- Prioritized by impact and urgency

By validating these dimensions, organizations transform their alert load from a burden into a reliable early-warning engine.

Core Components of Prueba De Evaluación De Alertas

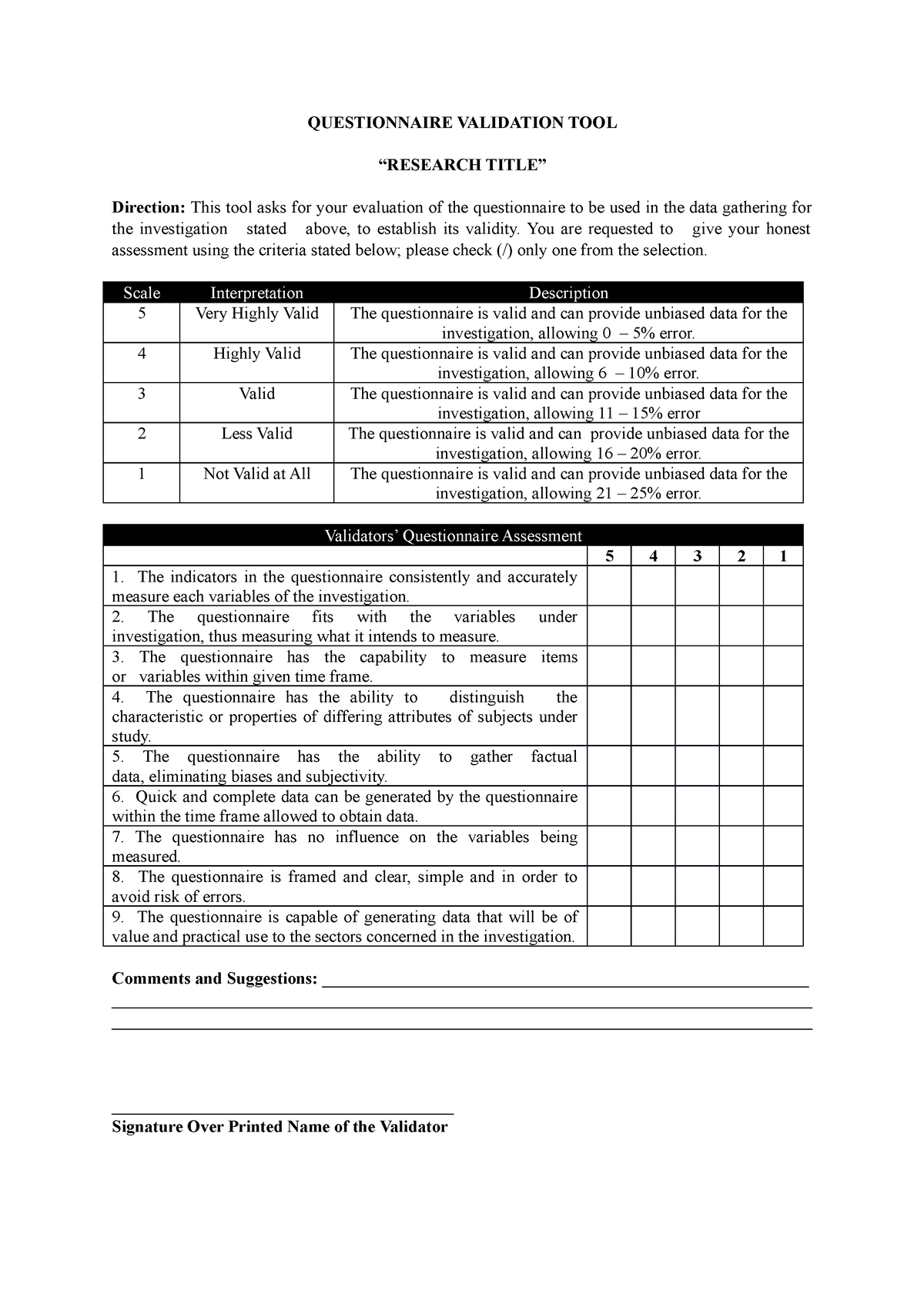

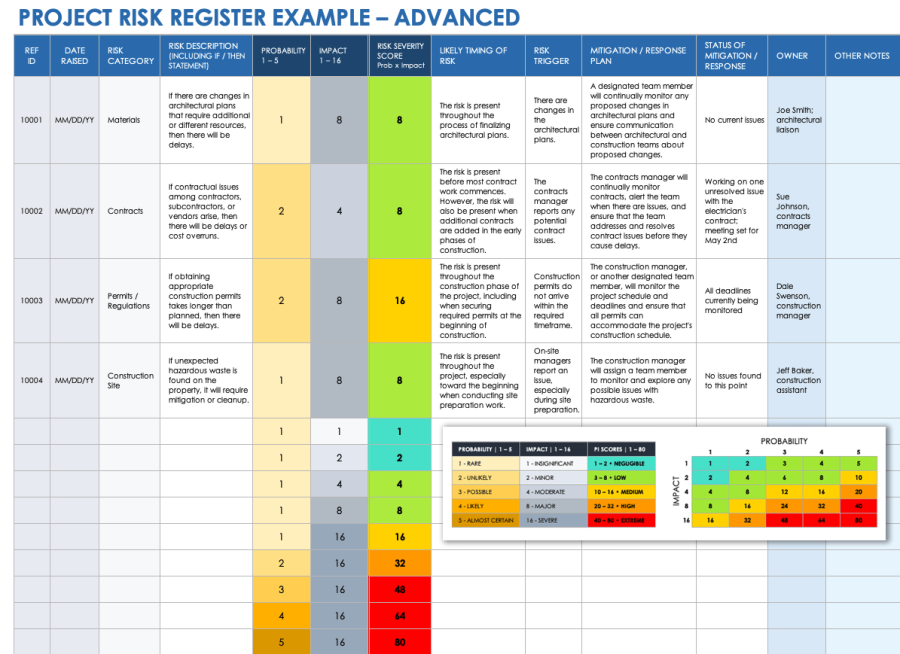

The assessment process follows a comprehensive sequence designed to expose both strengths and blind spots in alerting logic. Key phases include:

- Threshold Analysis: Rigorous testing ensures alert triggers align with actual risk boundaries. Too sensitive a threshold generates noise; too lenient a threshold risks missed events. For example, a network intrusion detection system should validate that alerts activate only when suspicious traffic exceeds established baseline behavior, not on minor fluctuations.
- Contextual Relevance Evaluation: Alerts must reflect real-world threats, not artifacts. Validation cross-checks whether alerts correlate with known vulnerabilities, user behavior patterns, or geo-indicators. A login alert triggered from an IP in a high-risk region carries more weight than one from a trusted network.
- Response Time Correlation: Timeliness is critical. The evaluation measures how quickly alerts flow from detection to notification and into response workflows. Delays that breach service-level objectives can render alerts obsolete by the time they reach operators.
- False Positive Ratio Measurement: Quantifying how often alerts turn out to be false ensures resources focus only on meaningful signals. This metric, often expressed as a percentage, informs tuning of detection rules and machine learning models.
- Human-in-the-Loop Simulation: Real operators test alert clarity, severity tagging, and next steps. Feedback drives refinement of alert presentation, ensuring critical insights are clear, concise, and actionable even under pressure.
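Two of the phases above, False Positive Ratio Measurement and Response Time Correlation, lend themselves to a short illustration. The following is a minimal Python sketch, not a reference implementation: the `AlertRecord` fields, the sample data, and the 60-second service-level objective are all assumptions made for the example and do not come from any particular monitoring product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AlertRecord:
    """Hypothetical record of one alert after analyst triage."""
    detected_at: datetime      # when the monitoring system detected the event
    notified_at: datetime      # when the operator was actually notified
    is_true_positive: bool     # analyst verdict: real threat or not


def false_positive_ratio(alerts):
    """Share of alerts labelled as false positives, from 0.0 to 1.0."""
    if not alerts:
        return 0.0
    false_positives = sum(1 for a in alerts if not a.is_true_positive)
    return false_positives / len(alerts)


def slo_breaches(alerts, slo):
    """Alerts whose detection-to-notification delay exceeds the SLO."""
    return [a for a in alerts if a.notified_at - a.detected_at > slo]


# Illustrative sample data (assumed, not measured).
t0 = datetime(2024, 1, 1, 12, 0, 0)
sample = [
    AlertRecord(t0, t0 + timedelta(seconds=20), True),
    AlertRecord(t0, t0 + timedelta(seconds=90), False),
    AlertRecord(t0, t0 + timedelta(seconds=30), False),
    AlertRecord(t0, t0 + timedelta(seconds=45), True),
]

fp_ratio = false_positive_ratio(sample)
late = slo_breaches(sample, slo=timedelta(seconds=60))

print(f"False positive ratio: {fp_ratio:.0%}")
print(f"Alerts breaching the 60 s SLO: {len(late)}")
```

In practice the analyst verdicts would come from a ticketing or case-management system, and the SLO would be whatever the organization has agreed for each severity level.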

Case Study: Transforming Alert Efficacy in a Financial Institution

A major European bank integrated Prueba De Evaluación De Alertas into its 24/7 operational monitoring system after experiencing recurring alert fatigue. Prior to validation, the institution faced more than 15,000 daily alerts, over 60% deemed non-actionable. After deploying the evaluation process:

- Threshold tuning reduced false positives by 58% within three months
- Contextual correlation improved threat detection accuracy to 92%, up from 67%
- Response time for critical alerts dropped by 42%, enabling rapid containment of potential breaches
- Operator feedback led to clearer, structured alert templates, cutting decision latency by 35%

This transformation illustrates how disciplined validation turns alert systems from reactive noise into precision tools. The bank now treats alert validation not as a technical afterthought, but as a cornerstone of its cybersecurity resilience.

The Human-Machine Synergy Behind Effective Alert Validation

While advanced analytics and AI enhance alert generation, human judgment remains irreplaceable. Prueba De Evaluación De Alertas bridges technological output with operational reality. Subject-matter experts (security analysts, engineers, and incident managers) bring contextual awareness no algorithm fully replicates. They assess nuances like organizational risk appetite, evolving threat landscapes, and past incident patterns that automated systems often miss.

Configurable rules and adaptive thresholds complement human insight, creating a feedback loop: machine efficiency identifies signals, expert evaluation ensures relevance. This synergy prevents blind spots—ensuring alerts evolve alongside threat behavior rather than lag behind.
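One way to picture this feedback loop is a threshold that is nudged after each review cycle according to analyst verdicts. The sketch below is only an illustration under stated assumptions: the function name `adjust_threshold`, the 20% target false-positive ratio, and the 5% step size are hypothetical choices, not values prescribed by the framework.

```python
def adjust_threshold(threshold, verdicts, missed_events,
                     target_fp_ratio=0.2, step=0.05):
    """Return an updated alert threshold after one review cycle.

    verdicts: list of booleans, True where an analyst confirmed the alert
              as a real threat, False where it was a false positive.
    missed_events: number of real incidents that did not trigger an alert.
    target_fp_ratio and step are assumed tuning knobs for this sketch.
    """
    if missed_events > 0:
        # Missed real events outweigh noise: make the rule more sensitive.
        return threshold * (1 - step)
    if verdicts:
        fp_ratio = verdicts.count(False) / len(verdicts)
        if fp_ratio > target_fp_ratio:
            # Too much noise: require a stronger signal before alerting.
            return threshold * (1 + step)
    # Within target, or nothing to review: leave the threshold alone.
    return threshold


# Illustrative review cycles (assumed data).
threshold = 100.0
noisy_cycle = adjust_threshold(
    threshold, verdicts=[True, False, False, False], missed_events=0)
missed_cycle = adjust_threshold(
    noisy_cycle, verdicts=[True, True], missed_events=1)
steady_cycle = adjust_threshold(
    threshold, verdicts=[True, True, True, True, False], missed_events=0)

print("After noisy cycle:", round(noisy_cycle, 2))
print("After missed-event cycle:", round(missed_cycle, 2))
print("After steady cycle:", round(steady_cycle, 2))
```

The machine side supplies the signal and the candidate threshold; the verdicts and missed-event counts are where expert evaluation enters the loop.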

Implementing Prueba De Evaluación De Alertas: Best Practices

Adopting this testing framework requires an intentional strategy. Organizations should:

- Define clear, measurable success criteria tied to business impact
- Automate routine validation tasks while preserving human oversight for complex scenarios
- Schedule regular testing cycles to adapt to new threats and system changes
- Integrate validation results into continuous improvement of detection logic and protocols
- Train teams not just on tools, but on critical thinking, so that alerts are interpreted correctly under pressure

Standards and guidance such as ISO/IEC 27001 and NIST SP 800-53 support such integration, emphasizing testing as core to information security governance.
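The first two practices above, measurable success criteria and automation of routine checks, could be combined in a small gate that runs at the end of each testing cycle. This is a hedged sketch only: the metric names and limits in `CRITERIA` are hypothetical placeholders, and each organization would substitute criteria tied to its own business impact.

```python
# Hypothetical success criteria: metric name -> (comparison kind, limit).
CRITERIA = {
    "false_positive_ratio": ("max", 0.25),
    "median_notify_seconds": ("max", 60.0),
    "detection_accuracy": ("min", 0.90),
}


def evaluate_cycle(metrics, criteria=CRITERIA):
    """Return the names of criteria that the measured metrics fail."""
    failures = []
    for name, (kind, limit) in criteria.items():
        value = metrics.get(name)
        if value is None:
            # A metric that was not measured counts as a failure.
            failures.append(name)
        elif kind == "max" and value > limit:
            failures.append(name)
        elif kind == "min" and value < limit:
            failures.append(name)
    return failures


# Illustrative measurements from one testing cycle (assumed values).
measured = {
    "false_positive_ratio": 0.31,
    "median_notify_seconds": 42.0,
    "detection_accuracy": 0.92,
}

failed = evaluate_cycle(measured)
print("Needs tuning:", failed or "nothing")
```

Anything the gate flags would then go to human reviewers, which keeps oversight in place for the cases that automation cannot judge.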

When properly implemented, Prueba De Evaluación De Alertas shifts alert management from chaos to control—turning data influx into decisive action. In an era where milliseconds matter, this discipline ensures alerts don’t just notify—they deliver.

This structured validation doesn’t eliminate uncertainty, but it drastically narrows the gap between detection and resolution. For leaders in high-risk sectors, embracing Prueba De Evaluación De Alertas isn’t optional; it’s the foundation of operational integrity, trust, and resilience in the digital age.
