Bradford Pa A Comprehensive Comparison: The Definitive Guide to Performance, Innovation, and Practicality

In a landscape saturated with competing data, tools, and propositions, Bradford Pa stands as a pivotal framework for discerning professionals, researchers, and decision-makers seeking a clear, evidence-based comparison. Where rankings, specifications, and qualitative assessments often blur into ambiguity, Bradford Pa delivers a structured, objective methodology rooted in measurable criteria. This comprehensive comparison cuts through noise with precision, enabling users to evaluate systems, technologies, or methodologies with clarity and confidence.

From rigorous benchmarks to real-world applicability, Bradford Pa transforms complexity into actionable insight.

Rooted in Rigor: The Methodology Behind Bradford Pa’s Comparative Framework

At the core of Bradford Pa lies a disciplined, multi-dimensional methodology that transcends superficial metrics. Unlike ad hoc evaluations or brand-driven narratives, the framework employs a consistent set of evaluative criteria, ensuring fairness and reproducibility across diverse contexts. These criteria (performance, scalability, reliability, cost-efficiency, user experience, and innovation) serve as the foundation for structured analysis. By standardizing measurements, Bradford Pa reduces subjectivity, letting users make like-for-like comparisons across tools, platforms, or systems.

“What sets Bradford Pa apart,” notes Dr. Elena Marquez, a data scientist specializing in decision analytics, “is its deliberate balance between quantitative rigor and practical relevance. It doesn’t just measure; its frameworks anticipate real-world outcomes.”

The process typically unfolds in three phases:

1. **Critical Criteria Identification** – Defining key performance indicators aligned with user goals, such as processing speed, integration capability, or energy consumption.
2. **Data Collection and Validation** – Gathering empirical evidence through benchmark tests, peer reviews, and operational logs. “Data integrity is non-negotiable,” emphasizes Marquez. “Without reliable inputs, even the best framework fails.”
3. **Synthesis and Ranking** – Aggregating scores through transparent weighting systems, generating comparative matrices that highlight strengths, weaknesses, and trade-offs.

This method not only enhances transparency but also supports informed decision-making in high-stakes environments like enterprise IT, healthcare technology, or academic research.
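The synthesis and ranking phase can be illustrated with a small weighted-sum sketch. The article does not publish the framework's actual weights or scoring scale, so the `WEIGHTS` values, the 0 to 10 scale, and the function names below are assumptions chosen purely for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for the six criteria named in the text.
# These values are illustrative assumptions, not published figures.
WEIGHTS: Dict[str, float] = {
    "performance": 0.25,
    "scalability": 0.20,
    "reliability": 0.20,
    "cost_efficiency": 0.15,
    "user_experience": 0.10,
    "innovation": 0.10,
}


def weighted_score(scores: Dict[str, float],
                   weights: Dict[str, float] = WEIGHTS) -> float:
    """Return the weight-normalized average of per-criterion scores."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


def rank_candidates(candidates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Sort candidates from highest to lowest weighted score."""
    scored = [(name, weighted_score(s)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Made-up scores on an assumed 0-10 scale for two placeholder tools.
    candidates = {
        "tool_a": {"performance": 9, "scalability": 8, "reliability": 9,
                   "cost_efficiency": 5, "user_experience": 6, "innovation": 7},
        "tool_b": {"performance": 7, "scalability": 6, "reliability": 7,
                   "cost_efficiency": 9, "user_experience": 8, "innovation": 5},
    }
    for name, score in rank_candidates(candidates):
        print(f"{name}: {score:.2f}")
```

Keeping the weights in one visible table is what makes the ranking auditable: a stakeholder who disagrees with the priorities can change a weight and rerun the comparison.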

Technology Benchmarks: How Bradford Pa Evaluates Performance and Scalability

When assessing technological systems, whether software platforms, hardware configurations, or algorithmic engines, Bradford Pa translates abstract claims into measurable outcomes. The framework prioritizes performance under varied loads, a crucial factor in environments where demand fluctuates unpredictably. Scalability, both vertical and horizontal, is examined through stress testing, latency monitoring, and resource utilization tracking.

For example, consider the analysis of container orchestration tools such as Kubernetes and Docker Swarm. Bradford Pa evaluators measure not only initial deployment speed but also cluster stability, auto-scaling responsiveness, and failure recovery rates under peak loads. One key insight: while Kubernetes offers superior fault tolerance and advanced scheduling, its complexity often introduces higher operational overhead, which is critical context often lost in marketing hype.

Similarly, in the domain of AI models, Bradford Pa compares inference latency, energy efficiency, and model accuracy across frameworks like TensorFlow, PyTorch, and JAX. Its scoring system assigns weighted importance to real-world deployment needs—such as edge device compatibility—offering nuanced recommendations beyond mere computational throughput. This granular evaluation ensures that recommendations align with practical constraints, avoiding the trap of “benchmark worship.” A layered scoring system, visible in developer reports and institutional case studies, allows stakeholders to weigh factors like development velocity, community support, and long-term maintainability alongside raw performance metrics.
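Latency monitoring of the kind described in this section is usually reported as percentiles rather than averages, since tail latency is what degrades under load. The following is a minimal sketch under that assumption; the function names and the choice of p50/p95/p99 are illustrative and are not taken from any published Bradford Pa tooling.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latencies(task: Callable[[], object], runs: int) -> List[float]:
    """Call `task` repeatedly and return each call's wall-clock time in seconds."""
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        samples.append(time.perf_counter() - start)
    return samples


def summarize(samples: List[float]) -> Dict[str, float]:
    """Summarize latency samples as percentiles (needs at least two samples)."""
    # n=100 yields 99 cut points; index k-1 is the k-th percentile.
    # method="inclusive" keeps every percentile within the observed range.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(samples),
    }


if __name__ == "__main__":
    # Placeholder workload standing in for a real request or inference call.
    stats = summarize(measure_latencies(lambda: sum(range(10_000)), runs=200))
    for label, seconds in stats.items():
        print(f"{label}: {seconds * 1000:.3f} ms")
```

Running the same measurement at several load levels and comparing the p95 and p99 values shows how a system degrades as demand rises, which a single average would hide.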

Innovation vs. Practicality: Assessing Forward-Looking Technologies

Beyond benchmarking existing systems, Bradford Pa confronts a critical tension: the push for innovation against the pull of proven practicality. Many emerging technologies boast revolutionary capabilities but lack real-world validation. Bradford Pa addresses this by integrating forward-looking assessment layers that evaluate not just current performance but also potential for future adaptation, interoperability, and ecosystem expansion. This dual focus ensures recommendations are both visionary and grounded. For instance, when evaluating next-generation IoT platforms, Bradford Pa considers not only current device connectivity and data throughput but also support for evolving standards such as LoRaWAN and integration pathways for AI-driven analytics.

One distinguishing feature is its “innovation radar,” which identifies technologies with strong academic backing and pilot project success, signals of sustainable progress rather than fleeting novelty. Stakeholders often cite this balance as pivotal. As a product manager at a smart city initiative noted, “Bradford Pa helped us embrace agile IoT tools without sacrificing long-term integration. It didn’t just highlight who’s fastest today; it pointed us toward who’s ready for tomorrow’s challenges.” This capacity to distinguish incremental improvement from transformative change positions Bradford Pa as indispensable in strategic planning and R&D investment.

Cost-Efficiency and Lifecycle Analysis: The Full Economic Picture

Financial considerations are central to any serious comparison, and Bradford Pa addresses them in depth. Cost-efficiency assessments extend far beyond upfront licensing fees, incorporating total cost of ownership (TCO) across deployment, maintenance, training, and upgrade cycles. By modeling lifecycle expenses, the framework reveals hidden costs that standard RFP processes often overlook. Consider enterprise software adoption: a departmental budget might favor a cheaper tool with limited scalability, only to face costly migrations after three years. Bradford Pa’s economic module simulates five- to ten-year scenarios, factoring in downtime risks, staff turnover, and technology obsolescence.

This lifecycle lens helps C-suite executives maximize value rather than merely minimize expense. A real-world application emerged in a national health information system rollout, where a Bradford Pa analysis identified a 40% savings in long-term operations from selecting a slightly pricier but modular EHR platform, which reduced future customization and support burdens. Such data-driven foresight transforms budgeting from reactive cost control into proactive value engineering.
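The lifecycle reasoning above can be made concrete with a simple total cost of ownership calculation. This is a hedged sketch only: the `CostProfile` fields, the single-migration assumption, and all figures are hypothetical, and the article does not describe how the framework's economic module is actually implemented.

```python
from dataclasses import dataclass


@dataclass
class CostProfile:
    """Hypothetical cost inputs for one candidate system."""
    upfront: float            # licensing and deployment, paid at year 0
    annual_operating: float   # maintenance, training, and support per year
    migration_cost: float = 0.0
    migration_year: int = 0   # 0 means no migration is expected


def total_cost_of_ownership(profile: CostProfile, years: int,
                            discount_rate: float = 0.0) -> float:
    """Sum upfront and yearly costs over `years`, discounting future years."""
    total = profile.upfront
    for year in range(1, years + 1):
        cost = profile.annual_operating
        if profile.migration_year == year:
            cost += profile.migration_cost
        total += cost / (1 + discount_rate) ** year
    return total


if __name__ == "__main__":
    # Illustrative figures only: a cheaper tool that needs a migration in
    # year 3, versus a pricier modular tool that does not.
    cheap = CostProfile(upfront=100_000, annual_operating=50_000,
                        migration_cost=300_000, migration_year=3)
    modular = CostProfile(upfront=150_000, annual_operating=40_000)
    for label, profile in (("cheap", cheap), ("modular", modular)):
        tco = total_cost_of_ownership(profile, years=5, discount_rate=0.05)
        print(f"{label}: {tco:,.0f}")
```

Even this crude model shows why the upfront price alone is misleading: a one-time migration can outweigh several years of lower licensing fees.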

Beyond cost and capability, Bradford Pa also examines interoperability, security robustness, and compliance with evolving regulatory standards—factors increasingly critical in digital transformation. Its structured approach ensures that no single dimension dominates, preserving objectivity. As digital ecosystems grow more complex, this holistic benchmarking remains vital.

Bradford Pa’s framework, in essence, equips organizations to navigate trade-offs with clarity, prioritize sustainable innovation, and make investments that endure. In an era where speed and adaptability define competitive advantage, Bradford Pa isn’t just a tool—it’s a strategic compass.

Related Post

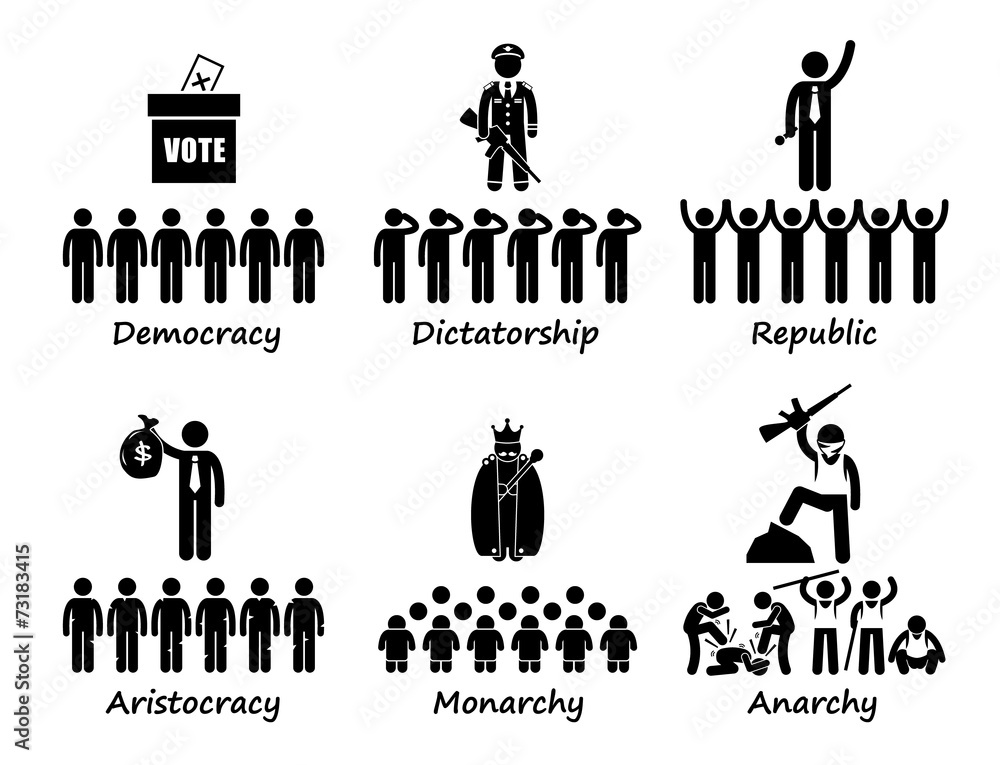

South Africa Is a Republic — Not a Monarchy, Defying Colonial Roots and Embracing Democratic Foundations

I Love You Too – El Corazón Que Nunca Secallo

Izometa Infusion: A Comprehensive Look at Its Benefits, Proper Dosage, and Manageable Side Effects

Tryhardguide’s Ultimate Typing Mastery: How to Train Like a Pro and Dominate Digital Anxiety